Difference between revisions of "Gen-2 Network Requirements"

Sven.fischer (talk | contribs)

Latest revision as of 10:12, 27 February 2023

ICR Gen2 connectivity requirements

This is a work in progress.

ICR (IC Rack) definition

ICR Gen2 shall consist of a varying number of servers that serve as IC nodes, in one or more groups that share a 19" rack or are placed within reach of the LAN. The servers shall have at least one NIC port compatible with the selected LAN type.

ICR quality of connectivity self-enforcing

Each ICR Gen2 node requires stable IPv6 connectivity to the Internet in order to execute its workload. This document does not set any quality requirements in terms of minimum bandwidth, maximum round-trip time or maximum jitter (RTT variation) to any specific peers. Instead, this page provides a high-level description of the communication patterns that can be expected to originate and terminate at correctly behaving ICR nodes, and defers to the ICR's ability to dynamically remove from the subnet any node that does not meet the connectivity requirements of its node-specific workload. This mechanism protects the ICR from misbehaving or broken nodes, network failures and even large-scale Internet outages. Moreover, it gives ICR node providers an incentive to monitor the availability and performance of their nodes, plan network capacity upgrades in time and provision enough capacity in general, because avoiding node removal from the subnet ultimately translates into a financial incentive.

ICR communication patterns

Each active ICR node operates as part of a specific subnet. The associations between nodes and subnets can be obtained in machine-readable form from the registry canister.

Each node needs to be able to set up TCP sessions over IPv6 to, and accept TCP sessions over IPv6 from, the following peers:

NNS nodes

All other nodes that share the same subnet

Use https://dashboard.mainnet.dfinity.systems/network/mainnet/topology or the mainnet-op tool to list the IP addresses in these categories.

Node providers are not encouraged to deploy static ACLs that limit the source and destination IP addresses or the source and destination TCP ports of the TCP segments coming to and from the ICR nodes. Should it prove necessary to apply ACLs and/or RTBH or Flowspec-based filters in order to mitigate a DoS attack, it is recommended to apply the minimum required restrictions and only for a strictly limited time.
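The requirement above (TCP sessions over IPv6 to the peers) can be spot-checked from a host on the ICR LAN. The following is a minimal sketch, not an official tool: the function name `tcp6_reachable` is hypothetical, and the peer address and TCP port have to be supplied by the operator, since this page does not list them.

```python
import ipaddress
import socket


def tcp6_reachable(address: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection over IPv6 to (address, port) succeeds.

    `address` must be a literal IPv6 address; anything else raises ValueError.
    """
    # Validate the input first so that IPv4 literals or hostnames are rejected.
    ipaddress.IPv6Address(address)
    try:
        with socket.socket(socket.AF_INET6, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            sock.connect((address, port))
            return True
    except OSError:
        # Covers refused connections, timeouts, unreachable networks and
        # hosts that have no IPv6 support at all.
        return False
```

A call such as `tcp6_reachable("2001:db8::10", 8080)` uses a documentation-range address and an arbitrary port purely for illustration. A `False` result only shows that the session could not be opened from this vantage point; it does not identify where along the path the traffic is blocked.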

ICR LAN

L1 + 2

Each ICR node has one or more Ethernet NIC ports that can be used by the OS running on the node. The existing nodes (in mid-2022) have two 1000BASE-T ports, two 10GBASE-T ports, or both.

Node providers are neither prohibited nor discouraged from deploying any other standard, including but not limited to 25GBASE-CR, 25GBASE-T, 50GBASE-CR or 100GBASE-CR4.

At this moment the HostOS supports only one LAN topology:

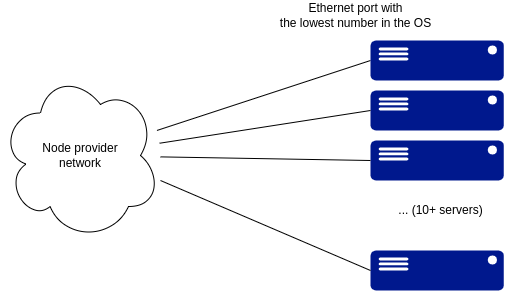

One Ethernet link to the node provider network, forming L2 connectivity for IPv6. The topology is shown in Schematic 1.

Future support of two link aggregation variants is envisioned:

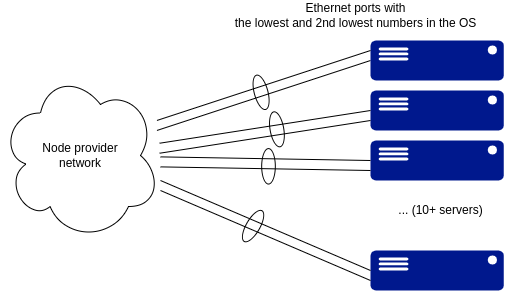

Two Ethernet links to the node provider network, using the basic Linux bonding driver in mode 1 (active-backup). This configuration requires both ports of each server to be configured as access ports on the switch and NOT as a Port Channel or LAG. Schematic 2 shows the physical topology.

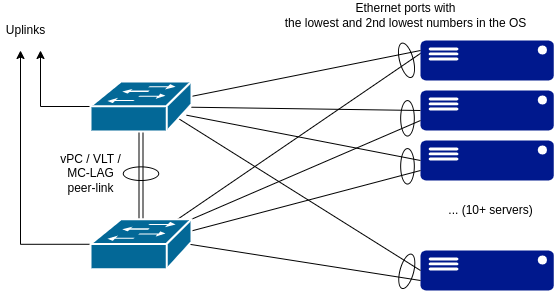

Link aggregation based on LACP (IEEE 802.3ad) supported by the nodes, with the fully redundant topology shown in Schematic 3.

Node providers are encouraged to build a redundant topology (Schematic 2 or Schematic 3) and to disable one of the redundant switches in the current phase.

Schematic 1: Single-Ethernet L2

Schematic 2: Dual-Ethernet L2

Schematic 3: Dual-Ethernet L2 with redundant switches
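For the envisioned active-backup variant, the Linux bonding driver reports its state as text under `/proc/net/bonding/<bond>`. The sketch below parses that text to confirm the mode and the link state of each member. It is an illustration only: the HostOS does not support bonding at this time, the sample text is abbreviated, and the interface names `eno1` and `eno2` are hypothetical.

```python
# Abbreviated, illustrative sample of /proc/net/bonding/bond0 content.
SAMPLE_STATUS = """\
Bonding Mode: fault-tolerance (active-backup)
Primary Slave: None
Currently Active Slave: eno1
MII Status: up
MII Polling Interval (ms): 100

Slave Interface: eno1
MII Status: up
Speed: 10000 Mbps

Slave Interface: eno2
MII Status: up
Speed: 10000 Mbps
"""


def parse_bonding_status(text: str) -> dict:
    """Extract the bonding mode, active member and per-member MII status."""
    status = {"mode": None, "active_slave": None, "slaves": {}}
    current_slave = None
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # blank line or line without a "key: value" shape
        key, value = key.strip(), value.strip()
        if key == "Bonding Mode":
            status["mode"] = value
        elif key == "Currently Active Slave":
            status["active_slave"] = value
        elif key == "Slave Interface":
            current_slave = value
            status["slaves"][current_slave] = None
        elif key == "MII Status" and current_slave is not None:
            # The bond-level "MII Status" appears before any member and is skipped.
            status["slaves"][current_slave] = value
    return status


def active_backup_is_redundant(status: dict) -> bool:
    """True if the bond is in active-backup mode with at least two members up."""
    up = [name for name, state in status["slaves"].items() if state == "up"]
    mode = status["mode"] or ""
    return "active-backup" in mode and status["active_slave"] in up and len(up) >= 2
```

On a live host the text would be read from the bond's file under `/proc/net/bonding/` instead of `SAMPLE_STATUS`. Requiring two members in the `up` state is a design choice of this sketch: a bond with a single healthy member still forwards traffic but no longer provides redundancy.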

L3

IPv6 connectivity on top of L2 shall be provided as an IPv6 /64 subnet terminated on the L2 segment, with one default GW address that will be configured on the ICR nodes as part of the OS image configuration.

The default GW must reply correctly to Neighbor Discovery requests, that is, to Neighbor Solicitation (Type 135) messages, and must fulfill all the requirements for NDP to work. Most notably, the GW must support IPv6 multicast and report correct multicast group membership over MLD, and the L2 segment must conform to all requirements for IPv6 multicast to work properly according to RFC 4861.

The use of IPv6-enabled first-hop redundancy protocols is encouraged where applicable, provided that the requirements above are fulfilled.
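An addressing plan can be checked against this requirement before nodes are deployed. The following is a minimal sketch using Python's standard `ipaddress` module; the function name `check_icr_lan` is hypothetical and the addresses in the usage note come from the 2001:db8::/32 documentation range, not from any real ICR.

```python
import ipaddress
from typing import List


def check_icr_lan(prefix: str, gateway: str, nodes: List[str]) -> List[str]:
    """Return a list of human-readable problems; an empty list means the plan is consistent."""
    problems = []
    # IPv6Network raises ValueError if host bits are set in the prefix.
    network = ipaddress.IPv6Network(prefix)
    if network.prefixlen != 64:
        problems.append(f"{network} is a /{network.prefixlen}, expected a /64")
    if ipaddress.IPv6Address(gateway) not in network:
        problems.append(f"default GW {gateway} is outside {network}")
    for node in nodes:
        if ipaddress.IPv6Address(node) not in network:
            problems.append(f"node {node} is outside {network}")
    return problems
```

For example, `check_icr_lan("2001:db8:0:1::/64", "2001:db8:0:1::1", ["2001:db8:0:1::10"])` returns an empty list, while a node address from a different /64 is reported as a problem.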
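The dependency on multicast follows from how address resolution works: a Neighbor Solicitation for a target address is sent to that target's solicited-node multicast address, which is formed from the prefix ff02::1:ff00:0/104 and the low 24 bits of the target (RFC 4291). The sketch below computes that group address. The function name is hypothetical and the example addresses are from the documentation range.

```python
import ipaddress

# Solicited-node multicast prefix ff02::1:ff00:0/104 as an integer.
_SOLICITED_NODE_BASE = int(ipaddress.IPv6Address("ff02::1:ff00:0"))


def solicited_node_multicast(address: str) -> ipaddress.IPv6Address:
    """Return the solicited-node multicast address for a unicast IPv6 address."""
    unicast = int(ipaddress.IPv6Address(address))
    # Keep only the low-order 24 bits of the unicast address.
    return ipaddress.IPv6Address(_SOLICITED_NODE_BASE | (unicast & 0xFFFFFF))
```

If the L2 segment drops or mishandles traffic to these groups, for example because of misconfigured MLD snooping, the nodes and the GW cannot resolve each other's link-layer addresses even though unicast forwarding itself is intact.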

Server IPMI / iDRAC connections

This document does not set any requirements for the IPMI or iDRAC connectivity. Connecting and using the remote monitoring and management is the responsibility of the node provider.

ICR Gen2 networking recommendations

Building blocks

Server racks

Building the ICR in one or more neighboring 19" racks is recommended.

Servers

Server specification is out of the scope of this document.

For the server LAN connectivity it is recommended to use dual-port 10GBASE-T NICs. Please see our reference design [3,4] for details.

No recommendations are provided regarding the connection of iDRAC / IPMI / BMC ports. In our reference design [3,4] an optional separate management switch is used for iDRAC / IPMI ports and the servers are provisioned with an iDRAC Enterprise license (or the equivalent for other brands).

ToR Switches

It is recommended to deploy data-center grade 1U 48-port 10GBASE-T switches with at least two 40GBASE-* or 100GBASE-* ports.

For the future redundant LACP-based topology, the switches have to be deployed in pairs that support multi-chassis LAG (MC-LAG; vPC in Cisco terminology, VLT in Dell OS10 terminology).

No specific network equipment vendor or model is recommended; however, the use of low-end and SOHO units is discouraged.

In the reference design Dell EMC S4148T-ON switch(es) are used with the latest stable version of OS10 for LAN and Dell EMC S3048-ON for connecting iDRAC / IPMI ports into the management network.

Cables and cable management

Cat6 cables are recommended for 10GBASE-T links and all other TP connections in the rack.

The reference design contains the BoM and the racking instructions [1,2] that list the specific cable managers with the self-retracting Cat6 cables in Patchbox cassettes.

No specific recommendations are provided for Ethernet connections that require media other than UTP. It is recommended to consult the switch vendors for the detailed specifications of the required transceivers.

Uplinks and links between the switches

It is recommended to provision at least one 10GBASE-* uplink (connection to the ISP that provides the Internet connectivity) for the ICR. However, there are no specific recommendations regarding the available bandwidth and connection quality and this question is deferred to the ICR quality of connectivity self-enforcing mechanism described above.

It is recommended to deploy redundant links between the switches and to use LAG to aggregate bandwidth and provide redundancy. The bandwidth of each connection between switches should be greater than or equal to the bandwidth of the uplink.

It is recommended to provision redundant uplinks and to terminate them on multiple switches where applicable (in the redundant network topology). There are no particular recommendations regarding the use of any L2 redundancy protocols (STP, RSTP, LACP-based LAGs etc.) on the ISP-ICR boundary.
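The bandwidth rule can be written down as a small check. This is a sketch under stated assumptions: "connection between switches" is read here as the aggregated LAG, speeds are given in Gbit/s, and the stricter single-failure check is an addition of this example rather than a requirement of this page. All function names are hypothetical.

```python
from typing import Sequence


def lag_bandwidth_gbps(member_speeds_gbps: Sequence[float]) -> float:
    """Aggregate bandwidth of a LAG, given the speed of each member link."""
    return float(sum(member_speeds_gbps))


def interswitch_meets_uplink(member_speeds_gbps: Sequence[float], uplink_gbps: float) -> bool:
    """True if the inter-switch LAG is at least as fast as the uplink."""
    return lag_bandwidth_gbps(member_speeds_gbps) >= uplink_gbps


def survives_member_failure(member_speeds_gbps: Sequence[float], uplink_gbps: float) -> bool:
    """Stricter variant: the rule still holds after the fastest member link fails."""
    if not member_speeds_gbps:
        return False
    remaining = lag_bandwidth_gbps(member_speeds_gbps) - max(member_speeds_gbps)
    return remaining >= uplink_gbps
```

Note that a LAG distributes traffic per flow, so a single TCP session is limited to the speed of one member link even when the aggregate figure is higher.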

L3 configuration

It is recommended to configure SLAAC for the /64 ICR LAN IPv6 prefix even though it is not required for ICR nodes to operate at this time.
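To illustrate what SLAAC yields on a /64, the sketch below derives an address from the advertised prefix and a MAC address using the modified EUI-64 method. This is only one possible scheme and an assumption of this example: many operating systems generate privacy or stable opaque interface identifiers instead, and this page does not state which method the nodes would use. The function name and the sample MAC address are hypothetical.

```python
import ipaddress


def slaac_eui64_address(prefix: str, mac: str) -> ipaddress.IPv6Address:
    """Combine a /64 prefix with a modified EUI-64 interface identifier."""
    network = ipaddress.IPv6Network(prefix)
    if network.prefixlen != 64:
        raise ValueError("EUI-64 based SLAAC needs a /64 prefix")
    octets = bytes.fromhex(mac.replace(":", "").replace("-", ""))
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address")
    # Flip the universal/local bit of the first octet and insert ff:fe
    # between the two halves of the MAC address.
    interface_id = bytes([octets[0] ^ 0x02]) + octets[1:3] + b"\xff\xfe" + octets[3:]
    return network.network_address + int.from_bytes(interface_id, "big")
```

With the prefix `2001:db8:0:1::/64` and the MAC address `00:11:22:33:44:55`, the interface identifier becomes `0211:22ff:fe33:4455`, which shows why SLAAC depends on the LAN prefix being exactly 64 bits long.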

It is recommended to assign or reserve one /29 IPv4 prefix for the ICR LAN subnet for future use.
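As a quick sizing aid, a /29 contains 2^(32-29) = 8 addresses, of which 6 are usable as host addresses on a conventional broadcast LAN. The sketch below shows the same arithmetic with Python's `ipaddress` module; the function name is hypothetical and 192.0.2.0/29 is a documentation-range prefix used only for illustration.

```python
import ipaddress


def describe_ipv4_prefix(prefix: str) -> dict:
    """Summarize the size and usable host range of an IPv4 prefix."""
    network = ipaddress.IPv4Network(prefix)
    hosts = list(network.hosts())  # excludes network and broadcast addresses
    return {
        "total_addresses": network.num_addresses,
        "usable_hosts": len(hosts),
        "first_host": str(hosts[0]),
        "last_host": str(hosts[-1]),
        "broadcast": str(network.broadcast_address),
    }
```

Calling `describe_ipv4_prefix("192.0.2.0/29")` reports 8 total addresses and the usable range 192.0.2.1 to 192.0.2.6. One of those addresses is typically taken by the gateway, which leaves room for only a handful of IPv4 endpoints.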

Performance and availability monitoring

It is recommended to deploy Streaming Telemetry-based (gRPC) performance monitoring of the switches, including but not limited to the port traffic volume, number of packets per second, number of packet drops, and Tx and Rx errors on each switch port. For optimal operation monitoring, capacity planning and issue analysis, the readout period shall be shorter than 30 seconds. Moreover, it is recommended to monitor the overall switch health (fans, PSUs etc.), TCAM usage, control plane load and other relevant parameters recommended by the switch vendor.
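Telemetry counters are cumulative, so the per-second figures mentioned above are derived from two consecutive readouts. The sketch below shows that calculation and flags readouts that are 30 seconds or more apart. The `PortSample` fields and function names are hypothetical; the real counter names and paths depend on the switch vendor and the telemetry model in use, and 64-bit counters are an assumption of this example.

```python
from dataclasses import dataclass

COUNTER_MODULUS = 2 ** 64  # assumes 64-bit cumulative counters


@dataclass
class PortSample:
    timestamp: float  # seconds since an arbitrary epoch
    rx_octets: int
    rx_packets: int
    rx_drops: int


def _delta(new: int, old: int) -> int:
    """Counter difference that stays correct across a single wrap-around."""
    return (new - old) % COUNTER_MODULUS


def port_rates(prev: PortSample, curr: PortSample, max_period_s: float = 30.0) -> dict:
    """Convert two cumulative readouts into per-second rates."""
    interval = curr.timestamp - prev.timestamp
    if interval <= 0:
        raise ValueError("samples must be in chronological order")
    return {
        "interval_s": interval,
        "within_period": interval < max_period_s,
        "rx_bits_per_s": _delta(curr.rx_octets, prev.rx_octets) * 8 / interval,
        "rx_packets_per_s": _delta(curr.rx_packets, prev.rx_packets) / interval,
        "rx_drops_per_s": _delta(curr.rx_drops, prev.rx_drops) / interval,
    }
```

Short readout periods matter because a rate computed this way is an average over the interval: a burst lasting a few seconds that causes drops is largely smoothed out when readouts are minutes apart.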

It is also recommended to set up a remote syslog server for archiving and analyzing the logs from the switches.

Finally, it is strongly recommended to use availability monitoring tools to continuously check the health of the entire infrastructure, including the uplinks, servers, switches, the iDRAC / IPMI BMC interfaces and the switch management interfaces.